The case (the real case) is Mata v. Avianca, Inc.

I need to do my little bit to remind people that ChatGPT (so-called "AI") is not a reliable source.

This video looks into the case of a lawyer who relied on a search for previous related cases carried out through ChatGPT. It's probably no surprise that ChatGPT, always so eager to be of use to its inquisitors, was able to "find" a number of cases, which it cited. The searcher gave this presumed body of case law to the lawyer, who presented it to the court, apparently without checking that the cited cases existed or said what they were claimed to say.

The opposing lawyers tried to look the cases up in the sources cited, only to find they did not exist. They wrote to the judge with their findings, and now the lawyer is being required to appear in court himself to justify his actions.

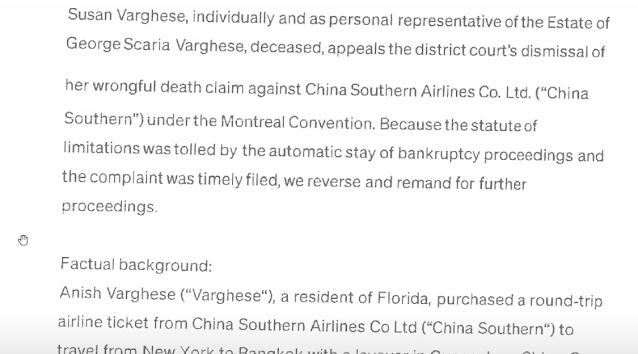

The video goes into detail, some of it quite amusing, because ChatGPT does not actually "understand" what it is writing. In one case, the "searched" document discusses a wrongful death lawsuit brought by Susan Varghese as personal representative of the deceased George Varghese.

But the "citation" goes on to say:

Anish Varghese (who's he?) was denied boarding due to overbooking, missed his flight and is alleging breach of contract.

So, hardly a wrongful death suit.

The whole video is worth watching to see what "mistakes were made" by relying on the output of AI text generators without extensive checks.

PS: This post isn't legal advice, and I am not a lawyer.